Introduction to NLP: When AI talks

The field of artificial intelligence is exciting. Within this discipline, machines can talk, see, and think. Computer vision allows machines to see. Conversational AI systems allow machines to talk. Deep learning allows machines to mimic aspects of human thinking.

Natural Language Processing (NLP) is an interdisciplinary field that combines linguistics, computer science, and artificial intelligence. It aims to enable computers to understand and communicate with humans using natural language. NLP involves developing algorithms and systems that can process and analyse large volumes of language data, and use it to interact with humans.

Interestingly, NLP began in the 1940s, after World War II, when people recognised the importance of translating languages by machine. They wanted a machine that could do this automatically and thus help people communicate effectively across physical and language barriers. The 1960s saw some early NLP systems, including SHRDLU, a natural language system that worked with a restricted vocabulary.

Then, after nearly 60 years, NLP got its biggest boost yet, thanks to Transformers.

In 2017, Google researchers published the famous "Attention Is All You Need" paper, which introduced a new network architecture called the Transformer. The Transformer was a breakthrough in NLP because it relied only on attention mechanisms. Attention lets the model weigh different parts of the input sequence against each other when generating each part of the output.

Moreover, Transformers did not use recurrence or convolutions, the building blocks of recurrent and convolutional neural networks respectively. Without recurrence, the model can process a sequence in parallel rather than one step at a time, which made Transformer models faster to train while also delivering higher quality.
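To make the idea of attention concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes toy two-dimensional vectors with made-up values, and the function names are illustrative rather than taken from the paper's code. A real Transformer adds learned projections, multiple heads, and much more.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy vectors for a three-token input (assumed values, for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how strongly one token attends to every token in the input. Because each row is computed independently, all rows could be computed in parallel, which is the property that removes the need for recurrence.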

But how is NLP used in modern-day enterprises? What are the requirements to build your own NLP models? And finally, what should we keep in mind before using these NLP models in our organisations?

NLP Techniques and Methods: How does AI understand human languages and responses

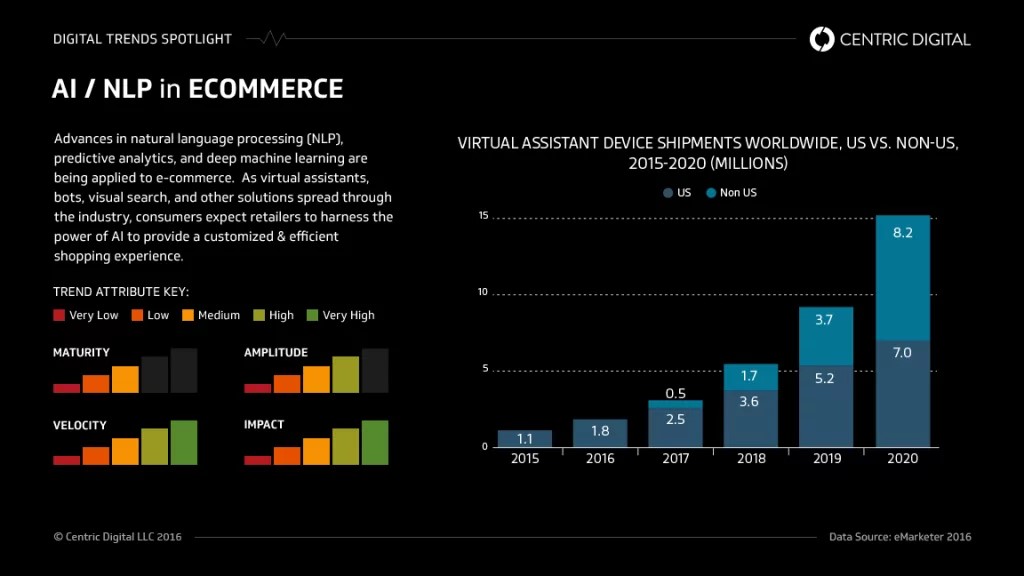

Interest in AI and NLP is growing at an exponential rate. Source: DZone Big Data

Natural Language Processing (NLP) uses a variety of techniques and methods to process and analyse human language. Broadly, building an NLP model combines three disciplines: computational linguistics, deep learning, and (lots of) statistics.

Computational linguistics refers to combining natural language and computational approaches to linguistic questions. Deep learning is a subfield of artificial intelligence that uses neural networks to learn from large amounts of data and make predictions based on these data. Statistics is also used in NLP to refine the training data, develop algorithms, and build models on top of these training data and algorithms.

Over recent years, the field of NLP has seen a wide range of applications. Some prominent applications of NLP used by modern enterprises are listed below:

- Chatbots and Virtual Assistants: NLP is used to build chatbots such as ChatGPT and virtual assistants such as Amazon’s Alexa. NLP allows these applications to interact with customers and provide information and assistance in natural, human-readable language.

- Speech Recognition: NLP often deals with the task of converting spoken words into text. This technology is used in applications such as voice dictation (speech-to-text), automated call centres, and even voice-enabled verification systems.

- Email Filtering: With the power of NLP, one can teach a system to filter and categorise emails based on their content. Moreover, by reading the content of emails, these models can label the messages as well. This helps in prioritising emails and reducing the time spent on email management.

- Language Translation: Machine translation was the original motivation for NLP. NLP software translates text from one language to another, as in Google Translate and similar tools.

- Proofreading: NLP powers grammar-checking software and autocorrect functions. This technology is used in applications such as Grammarly and in modern-day autocorrect apps.
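As an illustration of the email filtering application listed above, here is a minimal sketch of a Naive Bayes text classifier in plain Python. The training messages, the labels, and the function names are all hypothetical. A production filter would be trained on far more data and would usually rely on an established library.

```python
import math
from collections import Counter

# Tiny hand-written training set (hypothetical examples).
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money today", "spam"),
    ("meeting agenda for monday", "work"),
    ("please review the project report", "work"),
]

def train(examples):
    """Count words per label, documents per label, and the vocabulary."""
    word_counts = {}
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab

def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero the score.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAINING)
label_a = classify("free prize today", model)
label_b = classify("project meeting on monday", model)
```

With this toy data, the first message is labelled as spam and the second as work, because each shares more vocabulary with the corresponding training messages.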

On the technical front, there are three steps to training an NLP model: data acquisition, training, and fine-tuning. The model takes a text input (raw data) and splits it into smaller units that the machine can work with. The model then extracts useful information from these units and applies machine learning algorithms to process it. The machine repeats this process with some adjustments to improve the output, and so learns progressively.

Techniques such as tokenization, stemming, and lemmatization turn raw text into units that a machine can process, while tasks such as sentiment analysis extract meaning from those units.
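The preprocessing steps named above can be sketched in a few lines of plain Python. This is a deliberately naive illustration: the suffix list and the function names are assumptions, and real projects would normally use a library stemmer or lemmatizer, which handles far more cases.

```python
import re
from collections import Counter

# Suffixes stripped by this toy stemmer, checked in this order (an assumption).
SUFFIXES = ("ing", "ed", "s")

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def stem(word):
    """Strip one known suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def bag_of_words(text):
    """Count how often each stem occurs in the text."""
    return Counter(stem(token) for token in tokenize(text))

sentence = "The machines talked, and the machine is talking."
tokens = tokenize(sentence)
stems = [stem(token) for token in tokens]
counts = bag_of_words(sentence)
```

After stemming, "machines" and "machine" collapse to the same unit, as do "talked" and "talking", so the model sees them as the same word when counting.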

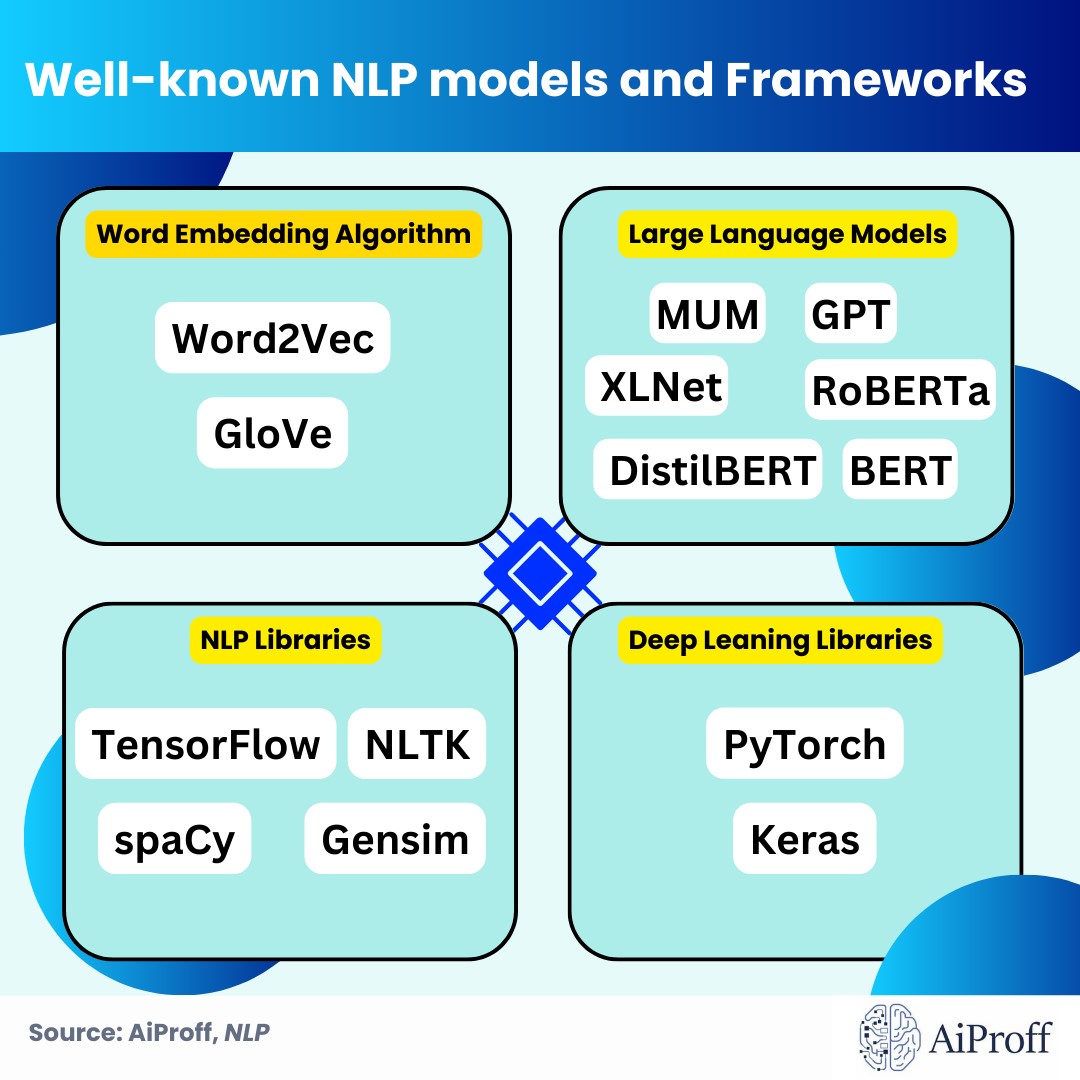

Here are some of the well-known NLP models and frameworks:

- TensorFlow: One of the most popular open-source frameworks for NLP and machine learning is TensorFlow, which was created by Google. TensorFlow is widely used for NLP tasks and has a large community of developers.

- BERT: BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained NLP model, also developed by Google. It can handle different tasks such as sentiment analysis, question answering, and named entity recognition.

- GPT-3: GPT-3 (Generative Pre-trained Transformer 3) is a popular transformer-based NLP model developed by OpenAI. It can perform a wide range of tasks such as text generation, code generation, and simple arithmetic.

- NLTK: NLTK (Natural Language Toolkit) is a popular open-source library for NLP in Python. It provides a wide range of tools for analysis and text processing. It supports various functionalities such as tokenization, tagging, and semantic analysis.

- Word2Vec: Word2Vec is a neural network-based model for learning word embeddings, developed by Google. Its embeddings are widely used as inputs for text classification, sentiment analysis, and other NLP tasks.
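To show what word embeddings make possible, here is a small sketch that compares words by the cosine similarity of their vectors. The three-dimensional vectors below are invented for illustration. Real Word2Vec embeddings are learned from large text corpora and typically have hundreds of dimensions.

```python
import math

# Hypothetical embeddings; the numbers are made up, not learned.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word, embeddings):
    """Return the other word whose vector is closest to the given word."""
    others = (w for w in embeddings if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )

nearest = most_similar("king", EMBEDDINGS)
```

With these toy vectors, "queen" is closer to "king" than "banana" is, which mirrors how learned embeddings place related words near each other.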

NLP Benefits and Shortcomings: Misinformation and Hallucinations

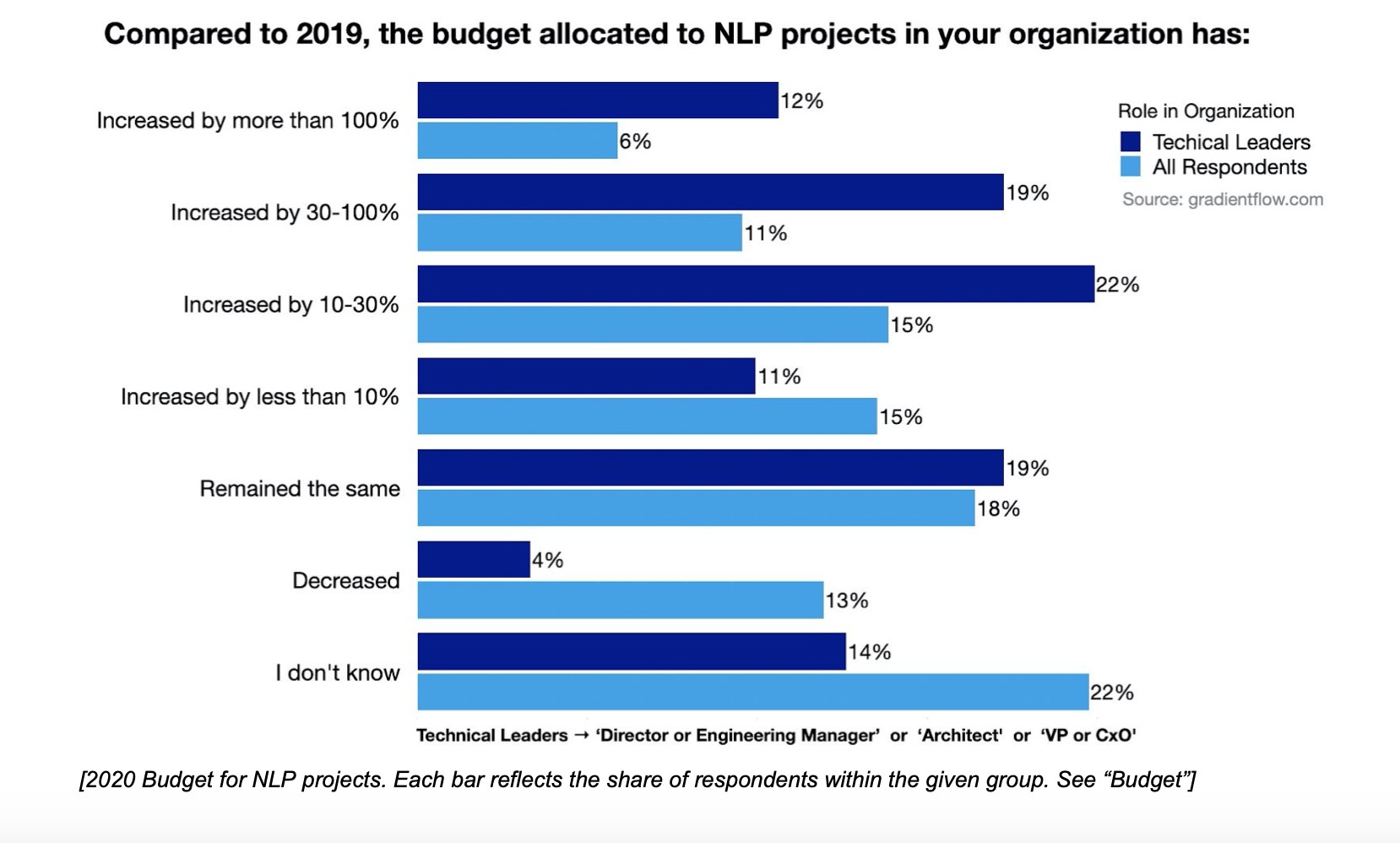

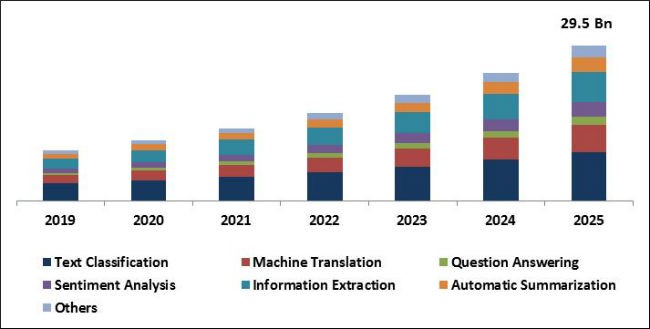

Natural language processing (NLP) is a rapidly growing field in the AI industry. According to forecasts, the global NLP market size will reach 43.9 billion U.S. dollars by 2025. The reason for this growth is that NLP is becoming an essential tool for analytics and knowledge creation in various domains, as enterprises increasingly rely on big data to gain insights and value.

2020 NLP survey. Source: prweb.com

The following four qualities of NLP make it a promising technology for enterprises:

- Perform large-scale analysis: NLP systems can process and interpret large amounts of data in a very short time. They can discover patterns that are hard or tedious for humans to find, allowing employees to boost their productivity with NLP applications and collaborate on a common objective. NLP is already applied to unstructured text data, social media analytics, customer support tickets, online reviews, and more.

- Automate processes in real time: Machines automate tasks quickly, and AI automates them intelligently. NLP tools help machines sort and act on information with little or no human interaction. Under human supervision, NLP tools can complete tasks quickly, efficiently, and accurately.

- Search and query a huge corpus of unstructured data: Generative AI uses NLP to make searching and querying data, including image data, easier. This is useful in fields like healthcare and law, where NLP apps can quickly and efficiently access records dating back 20 to 30 years. Moreover, using NLP, you can not only retrieve information from a huge sea of unstructured data, but also run analysis over it to find key trends or patterns.

- Analyse a large pool of data in real time: NLP techniques enable applications to analyse large amounts of data in real time. For instance, MeetGeek and Otter.ai are applications that capture and summarise the key points of a meeting with multiple participants. This can make meetings more productive by saving the time and effort otherwise spent on manual transcription and summarisation.

NLP, thus, has limitless potential for data-driven industries. This innovative technology can revolutionise any field that involves data and analysis.

However, no matter how revolutionary this field of AI is, it is not devoid of flaws. Just like any other AI application, NLP apps also have inherent drawbacks – hallucination and misinformation.

One of the ethical challenges of AI systems is that they can produce false or harmful information or stereotypes. This can occur when the AI model creates text that is grounded not in facts but in its own biases, its lack of real-world knowledge, or flaws in the data it was trained on.

These outputs are known as hallucinations. They are especially dangerous because a language model states its output with confidence even when it is wrong, so it can deceive or misinform people on a large scale.

Misinformation is the downstream effect of hallucination. Once false output spreads, it can reinforce harmful stereotypes and, at worst, cause widespread panic, which makes such AI systems ethically questionable.

Thus, improper implementation of NLP applications in your enterprise could lead to heavy costs if they behave erroneously. This apparent risk only increases in magnitude as the scale of the enterprise increases.

Solutions & AiProff Assistance

The good news is that there are many ways to overcome these shortcomings.

First, you can improve the quality and diversity of your training data. This will help your model avoid hallucination and misinformation, as it will have more and varied data to learn from.

Moreover, identifying and reducing the inherent biases in the data also makes these models less susceptible to generating erroneous responses.

Second, you can use better regularisation methods. Regularisation techniques help prevent overfitting and other issues in the AI model that could lead to hallucinations.
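As a small illustration of regularisation, the sketch below fits a single weight with gradient descent, with and without an L2 penalty. L2 regularisation is only one of many such methods, and the data, learning rate, and penalty strength here are assumed values chosen for the example.

```python
def fit_weight(xs, ys, l2=0.0, lr=0.01, steps=2000):
    """Fit y = w * x by gradient descent on mean squared error plus l2 * w**2."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Gradient of the L2 penalty, which pulls w towards zero.
        grad += 2 * l2 * w
        w -= lr * grad
    return w

# Toy data that follows y = 2 * x exactly (assumed values).
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_plain = fit_weight(xs, ys)
w_regularised = fit_weight(xs, ys, l2=1.0)
```

Without the penalty, the weight converges to the exact fit. With the penalty, it settles at a smaller value, trading a slightly worse fit on the training data for a simpler model.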

Additionally, employing adversarial training and reinforcement learning can improve the robustness of NLP models and reduce the risk of hallucinations.

Third, you can apply more constraints on the model's output, such as limiting the length of responses or limiting the scope of facts. This will help your model generate more relevant and accurate responses.
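The two constraints mentioned above, limiting response length and limiting the scope of facts, can be sketched as a simple post-processing step. Everything here is hypothetical: the allow-list, the fallback message, and the function name are illustrative, and real systems typically use more sophisticated grounding checks than exact sentence matching.

```python
def constrain_response(response, allowed_facts, max_words=30):
    """Keep only sentences found in the allow-list, then cap the word count."""
    sentences = [s.strip() for s in response.split(".") if s.strip()]
    # Exact, case-insensitive matching against the allow-list (a simplification).
    grounded = [s for s in sentences if s.lower() in allowed_facts]
    if not grounded:
        return "I do not have verified information on that."
    words = (". ".join(grounded) + ".").split()
    return " ".join(words[:max_words])

# Hypothetical allow-list of verified statements, stored in lowercase.
ALLOWED = {
    "the transformer was introduced in 2017",
    "bert was developed by google",
}

raw = (
    "The Transformer was introduced in 2017. "
    "It won a Nobel Prize. "
    "BERT was developed by Google."
)
result = constrain_response(raw, ALLOWED)
```

In this example, the unverified middle sentence is dropped and the two verified sentences are kept. When nothing in the response matches the allow-list, the function returns a fallback message instead of an unverified claim.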

As we can see, AI can offer many benefits for your organisation. However, the correct implementation of AI in your organisation is important.

It may seem easy to use AI-based solutions for various tasks, but the reality is that NLP apps, like any other deep learning applications, are not always reliable. The reason for this is that the more data and complexity you have, the more unpredictable the deep learning architecture becomes.

The Diversity in AI and NLP, and interest in this field over the decade, has been growing. Source: kbvresearch.com

Right now, NLP is one of the most important and influential fields in the age of AI. However, NLP also faces many limitations and challenges that need to be addressed.

To make the best use of NLP, one needs to understand how the NLP model works and design the optimal way to implement it in the organisation.

This is where AiProff can assist you. AiProff is a leading company in the AI domain that enables you to discover the endless opportunities that AI provides. We are a group of skilled experts with a wealth of knowledge and experience in machine learning, artificial intelligence, and data science.

We not only know how to build machine learning models but also how to detect and prevent vulnerabilities and biases that can lead to erroneous or harmful outcomes.

We excel at creating state-of-the-art solutions as Minimum Viable Products for Enterprises and Academic Institutions to lower the entry barrier – using cutting-edge AI/ML solutions – and expedite time to market.

Interested in making your revolutionary products/services using AI? Contact us:

Don’t let your critical and essential AI/ML workloads be at the mercy of naive assumptions.

Let’s secure and safeguard your innovation and efficiencies to establish a robust and sustainable growth trajectory.
